Opinion | Take a breath: Michigan schools’ test scores aren’t as bad as you think

Thirty-two percent of Michigan’s fourth-graders score at the seventh-grade level on the National Assessment of Educational Progress test.

Did you miss that headline recently? I did, too.

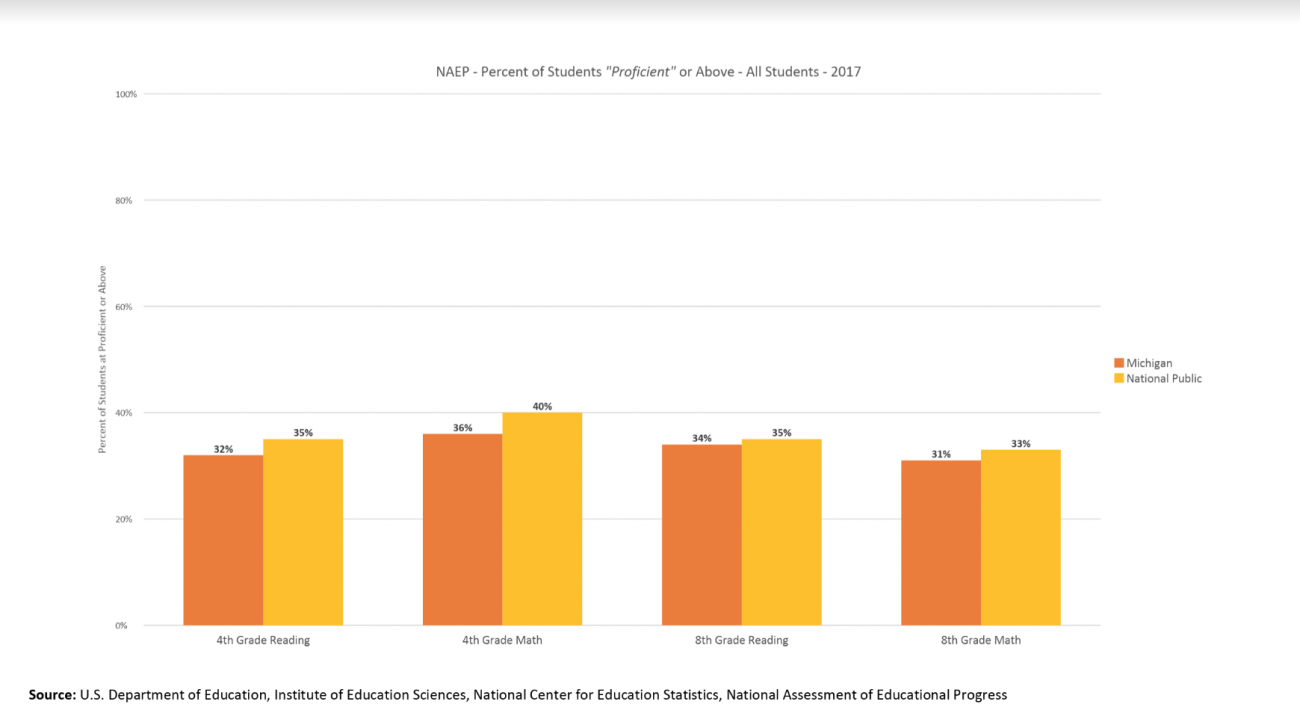

That’s because it wasn’t reported. Instead, Michigan’s schools and students were slammed by pundits because just 32 percent were “proficient” on the NAEP. To be proficient at the fourth-grade level, students must answer seventh-grade questions.

If you think that is confusing, or just doesn’t sound right, join the tens of thousands of educators who think the same thing.

It was reported – and is commonly accepted – that Michigan’s schools are failing and falling behind based on the NAEP scores released April 10. What is not reported is what the NAEP scores measure and, more importantly, what the student performance measures represent.

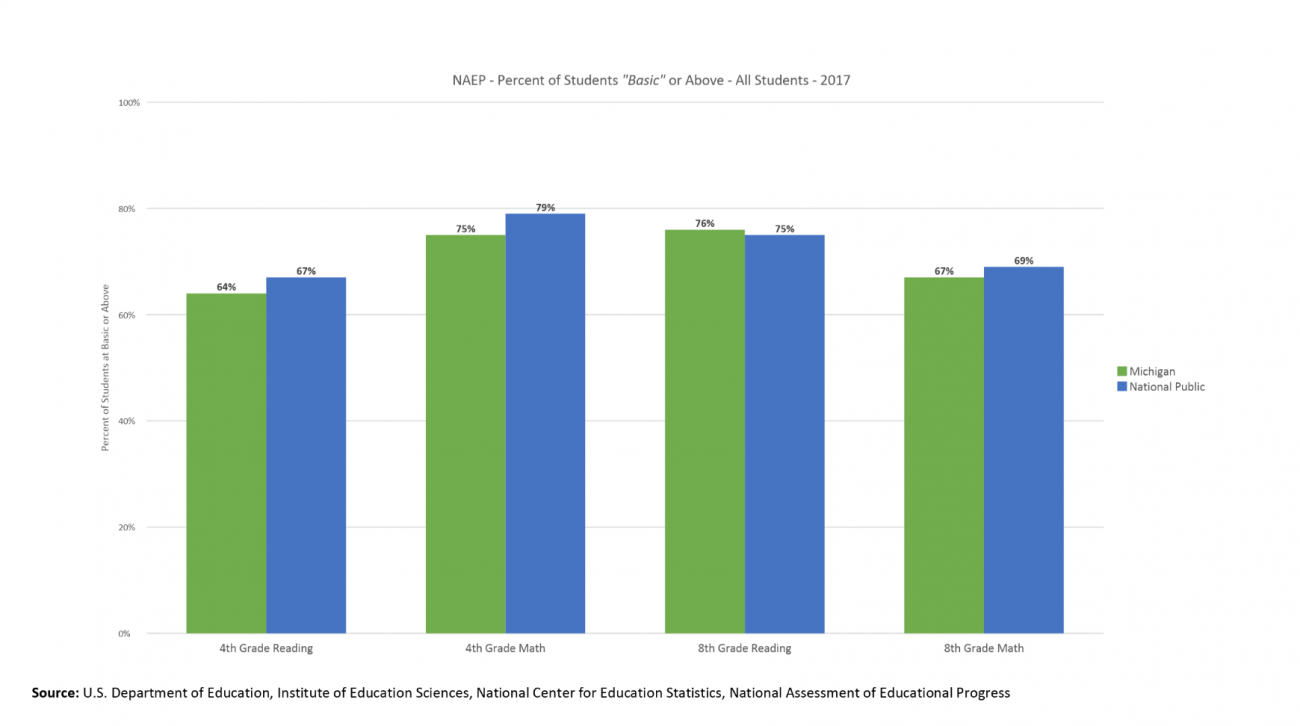

Scores on the NAEP are measured on a three-part scale, according to the National Center for Education Statistics (NCES), the federal entity that administers the NAEP. Student performance is reported as “basic,” “proficient,” or “advanced.” What do those words mean to you? With the release of the 2017 results, the associate commissioner of NCES, Dr. Peggy Carr, acknowledged that looking at whether students reach “basic,” rather than “proficient,” is a better indicator of whether they are meeting grade-level standards.

Unfortunately, NAEP scores are never reported in that fashion. Proficiency, and the percentage of students who perform “above” grade level, is the coin of the realm. Researcher James Harvey, in his Educational Leadership article “The Problem with ‘Proficient,’” reports that the bar is so high that few nations in the world – like even our highest-performing states – would report more than 50 percent proficient if their students took the NAEP.

In Michigan, if performing at grade level were the measure, the percentage of students meeting this benchmark would be much higher. Again, these rates don’t vary much from the nation’s.

We are victims of what researchers have dubbed “misNAEPery.”

Here are some additional considerations regarding the recently released NAEP scores:

Small differences among states make rankings problematic

NAEP administers its assessment to a small sample of students in each state, much like public polling. And as with public polling, the results always carry a margin of error. Because scores between states are often close, these margins can make a big difference.

For example, in fourth-grade reading, 19 states have results that are not statistically different from Michigan’s average. In fact, in three of the four subject and grade-level combinations, there is no statistically significant difference between the national public average and Michigan’s average. Moreover, there is no statistically significant difference between Florida’s and Michigan’s scores in eighth-grade reading and math, despite Florida having been heralded as a national “bright spot” by U.S. Secretary of Education Betsy DeVos.

Improvements in scores over two or more years are not the same as student growth

Because NAEP is administered every two years, many misinterpret changes in scores as student growth. Student growth refers to the improvements or declines of a single student or cohort of students across two or more points in time. NAEP, however, does not test the same students over time. In fact, sampled students don’t even take the full assessment; each completes only a portion of it under a technique known as “matrix” sampling. To the frustration of local school leaders, this design also means individual schools cannot receive their own results.

Causation is not the same as correlation

Differences in NAEP scores can be due to countless factors, ranging from variations in poverty levels to changes in policy. However, the temptation to jump to conclusions, particularly about short-term changes, is often too hard to resist. This exact scenario may be playing out in Tennessee. In 2013, the state was lauded as the “fastest improving” in the nation, with the gains attributed to its massive education reforms in previous years. Unfortunately, those gains didn’t carry into subsequent years, with the 2017 results described as “disappointing” by local stakeholders.

Given these complexities, should we toss out NAEP altogether? No. NAEP gives states a general sense of how their students are performing. It can also open the door to further investigation of trends and areas worth monitoring.

But scoring states, and scaring policy-makers and parents with scores and stories that make it appear as though their schools are failing, does a disservice to our students, educators, schools and districts. There is much improvement to be made, and Michigan’s schools must get better if we are to restore our mitten to the economic powerhouse it once was. The improvements to be made are much more manageable if we are honest about our scores and our shortcomings.
